Deep neural networks have shown impressive performance in a variety of domains, including vision, NLP, and the natural sciences. However, there is still a lack of trust in their safe and reliable deployment in safety-critical applications such as autonomous driving, medical diagnosis, and finance, which hinders their real-world applicability. Constructing models that are both accurate and trustworthy to the desired degree requires solving several hard, fundamental problems that lie beyond the reach of existing methods.

In this tutorial, we will discuss recent work in the community on building state-of-the-art trustworthy AI models with provable safety and correctness guarantees. These methods combine classic ideas from formal methods, such as abstractions and solvers, with techniques used to create modern AI systems (e.g., optimization and learning). We will also discuss several open problems whose solutions would substantially advance the field of trustworthy AI.

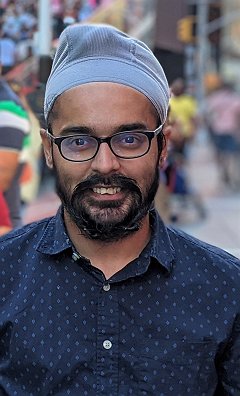

I am a tenure-track Assistant Professor in the Department of Computer Science at the University of Illinois Urbana-Champaign (UIUC). My research lies at the intersection of artificial intelligence (AI) and programming languages. My long-term goal is to construct intelligent computing systems with formal guarantees about their behavior and safety.

Mon 17 Jan. Displayed time zone: Eastern Time (US & Canada)

15:00 - 16:30

15:00 (90 min) Tutorial | Formal Methods and Deep Learning [Part B] | In-Person TutorialFest | Gagandeep Singh, University of Illinois at Urbana-Champaign; VMware